Revolutionizing AI performance through radical co-design.

We make principled design decisions at every level of the stack, narrowing the abstraction gap between neural networks and their implementation in silicon.

Our commitment to co-design ensures unprecedented levels of performance, and through our research we aim to set a new standard in AI compute.

Digital In-Memory Compute

AI workloads have extraordinary compute and memory demands, and they are often bottlenecked by legacy computer architectures. Rain AI is pioneering the Digital In-Memory Computing (D-IMC) paradigm to address these inefficiencies in AI processing, data movement, and data storage.

Unlike traditional In-Memory Computing designs, Rain AI’s proprietary D-IMC cores are scalable to high-volume production and support both training and inference. When combined with Rain AI's proprietary quantization algorithms, the accelerator maintains FP32 accuracy.

Result: record compute efficiency

Numerics

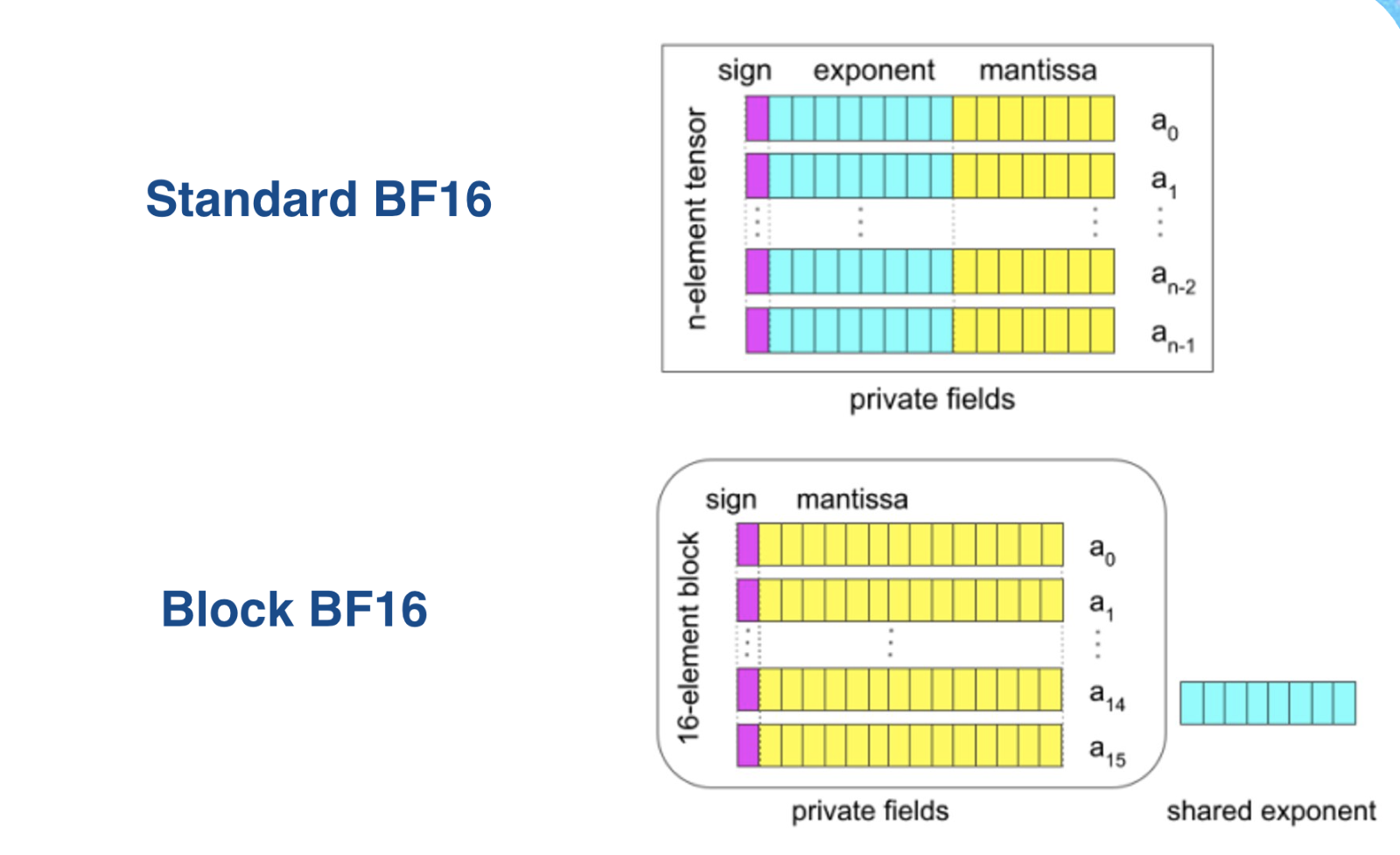

Reaping the benefits of high-accuracy, AI-focused numerics in hardware remains a core challenge in AI training and inference. Rain AI’s block brain floating point scheme ensures no accuracy loss compared to FP32. The numerical formats are co-designed at the circuit level with our D-IMC core, leveraging the immense performance gains of optimized 4-bit and 8-bit matrix multiplication. Our flexible approach ensures broad applicability across diverse networks, setting a new standard in AI efficiency.

Result: no accuracy loss compared to FP32

https://proceedings.mlr.press/v162/yeh22a/yeh22a.pdf
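
As a rough illustration of how a block floating point format can feed low-bit integer matrix multiplication, the sketch below quantizes a block of values to signed integer mantissas that share one exponent, then computes a dot product with integer multiply-accumulate and a single final rescale. This is a generic textbook-style scheme, not Rain AI's proprietary format; the function names (`bfp_quantize`, `bfp_scale`, `bfp_dequantize`, `bfp_dot`), the rounding rule, and the choice of the shared exponent are all assumptions made for illustration.

```python
import math

def bfp_scale(shared_exp, mantissa_bits=8):
    """Value of one mantissa step for a block with the given shared exponent."""
    return 2.0 ** (shared_exp - (mantissa_bits - 1))

def bfp_quantize(block, mantissa_bits=8):
    """Return (shared_exp, mantissas) for one non-empty block of floats."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0] * len(block)
    # frexp gives max_abs = m * 2**shared_exp with 0.5 <= m < 1.
    _, shared_exp = math.frexp(max_abs)
    qmax = 2 ** (mantissa_bits - 1) - 1
    scale = bfp_scale(shared_exp, mantissa_bits)
    # Round to the nearest step and clamp into the signed mantissa range.
    mantissas = [max(-qmax, min(qmax, round(x / scale))) for x in block]
    return shared_exp, mantissas

def bfp_dequantize(shared_exp, mantissas, mantissa_bits=8):
    """Reconstruct approximate float values from a quantized block."""
    scale = bfp_scale(shared_exp, mantissa_bits)
    return [m * scale for m in mantissas]

def bfp_dot(a, b, mantissa_bits=8):
    """Dot product of two quantized blocks of equal length."""
    (exp_a, man_a), (exp_b, man_b) = a, b
    acc = sum(x * y for x, y in zip(man_a, man_b))  # integer-only accumulate
    return acc * bfp_scale(exp_a, mantissa_bits) * bfp_scale(exp_b, mantissa_bits)

block = [0.5, -0.25, 0.125, 1.0]
q8 = bfp_quantize(block, mantissa_bits=8)  # (1, [32, -16, 8, 64])
q4 = bfp_quantize(block, mantissa_bits=4)  # (1, [2, -1, 0, 4])
```

In this toy block the 8-bit mantissas reproduce every value exactly, while the 4-bit mantissas round the smallest value (0.125) to zero. That is the kind of precision trade-off that block size and mantissa width have to balance in any shared-exponent format.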

RISC-V

AI accelerators often fail to compile workloads because of a lack of hardware support. Rain AI harnesses the power of the RISC-V ISA, allowing AI developers unparalleled flexibility to implement any operator and compile any model. Rain AI has developed a proprietary interconnect between RISC-V and D-IMC cores, offering superior performance through a balanced pipeline.

Result: high performance and broad reprogrammability on any operator

On-device fine-tuning

AI models often fail upon deployment due to the inevitable mismatch between training and deployment environments. Fine-tuning solves this problem but requires devices to support high-performance training. Rain AI is co-designing fine-tuning algorithms (e.g., LoRA) with hardware to facilitate efficient real-time training.

Result: >10% improvement in AI accuracy in realistic deployment environments

https://arxiv.org/pdf/2307.15063.pdf
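
To make the LoRA idea concrete, here is a minimal pure-Python sketch of a linear layer whose pretrained weight stays frozen while two small low-rank matrices are trained. It is a generic illustration of the technique, not Rain AI's implementation; the class name `LoRALinear`, the helper `matvec`, the initialization scale, and the hyperparameters are assumptions chosen for the example.

```python
import random

def matvec(matrix, vec):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

class LoRALinear:
    """y = W x + (alpha / rank) * B (A x), with W frozen and only A, B trained."""

    def __init__(self, weight, rank=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        out_dim, in_dim = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weight, never updated
        # A starts small and random, B starts at zero, so the adapter
        # initially leaves the layer's output unchanged.
        self.A = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(out_dim)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def sgd_step(self, x, target, lr=0.01):
        """One SGD step on 0.5 * ||forward(x) - target||^2.

        Returns the loss measured before the update.
        """
        ax = matvec(self.A, x)
        err = [y - t for y, t in zip(self.forward(x), target)]
        # Gradient w.r.t. A needs B^T err, computed before B is modified.
        bt_err = [sum(self.B[i][k] * err[i] for i in range(len(err)))
                  for k in range(len(ax))]
        for i, e in enumerate(err):        # dB = scale * outer(err, A x)
            for k, a in enumerate(ax):
                self.B[i][k] -= lr * self.scale * e * a
        for k, g in enumerate(bt_err):     # dA = scale * outer(B^T err, x)
            for j, xj in enumerate(x):
                self.A[k][j] -= lr * self.scale * g * xj
        return 0.5 * sum(e * e for e in err)

layer = LoRALinear([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
x, target = [1.0, 0.5, -1.0], [1.5, 0.0]
loss_before = layer.sgd_step(x, target)
loss_after = layer.sgd_step(x, target)  # loss after the first update
```

Because only `A` and `B` receive gradient updates, the training-time state grows with the rank rather than with the full weight matrix. The savings only appear when the rank is much smaller than the layer dimensions, which is not the case in this tiny example.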